There's currently a bill circulating in Congress concerning funding requirements for the NSF. Lamar Smith (whose name sounds so familiar, but I just can't remember when I've mentioned him before) wants to ensure that taxpayers are getting their money's worth when it comes to science. Derek Lowe, over at In The Pipeline, has already written about this today... twice. This means, of course, that a much more competent and experienced blogger has already weighed in on this subject, but I'm going to add my thoughts anyway. The bill requires that each funded project be:

1) "…in the interests of the United States to advance the national health, prosperity, or welfare, and to secure the national defense by promoting the progress of science;

2) "… the finest quality, is groundbreaking, and answers questions or solves problems that are of utmost importance to society at large; and

3) "…not duplicative of other research projects being funded by the Foundation or other Federal science agencies."

This may seem pretty innocent. After all, it sounds reasonable that if taxpayers are footing the bill for a project, they should expect to benefit directly from their investment. Unfortunately, these new requirements probably won't lead to higher-quality research being funded. The NSF already requires that proposals demonstrate intellectual merit and broader impacts. The new requirements will most likely just lead scientists to pad their proposals with language that appears to satisfy them.

I can only assume that this legislation is a reaction to what I lovingly call "Duck-penis-gate", the controversy that began about a month ago when conservatives criticized the NSF for funding research into duck penises. Giving someone $400,000 to study duck penises sounds wasteful, but research like this is a necessary part of science.

Applied Research

Applied research asks the question: What practical uses are there for this? Applied research is important, and it's most of what the private sector does. Developing the newest iPhone advances our knowledge, but it's applied research. It seems to me that Lamar Smith sees all research as applied research. Either that, or he thinks that only applied research should be funded (I strongly disagree).

Basic Research

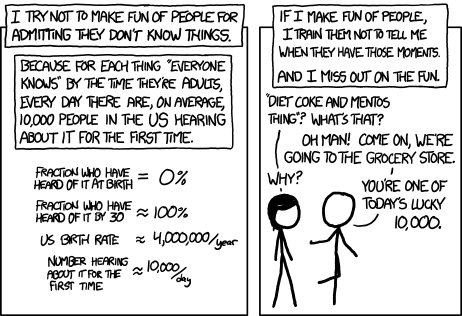

Basic research asks a much broader question than applied research. Instead of developing a single application, researchers are simply extending the base of our knowledge. The duck penis study at the center of duck-penis-gate is basic research. There are fundamental questions about the nature of the universe that can only be answered by basic research.

The point is, we don't know which research is going to lead to major advances. To quote Derek Lowe, we can't just "work on the good stuff" because, frankly, we don't know what will end up being the good stuff. If we already knew where to look to find interesting science, we wouldn't need to do research at all. There's a quote by Isaac Asimov that sums up the importance of basic research nicely:

"The most exciting phrase to hear in science, the one that heralds new discoveries, is not 'Eureka!' (I found it!) but rather, 'hmm... that's funny...'"

Basic research can't promise to "[solve] problems that are of utmost importance to society at large" because we don't know which line of research will (or won't) bring us the next major discovery.

Edit:

After writing this I found this statement by President Obama:

"In order for us to maintain our edge, we’ve got to protect our rigorous peer review system"

It's good to see that the President understands that the question of what research is "important" should be decided by scientific peer review, not by political review.

Edit #2:

On Reddit I came across the following question:

"Someone explain to me why getting rid of expensive government research grants in favor of privatization is a bad thing."

I think this is a legitimate question (though, judging by the number of downvotes it received, a very unpopular one). Here's the answer I gave:

"Private companies are great at applied research, but lousy at basic research.

Applied research seeks to develop technology and other applications based on known science.

Basic research seeks to push the limits of our understanding, but its results are often harder or slower to get. That's because at the edge of our understanding, we don't know what we don't know, so we can't say which research will yield the 'good' results. Private companies are not likely to invest in something without a direct, immediate monetary return. However, humanity benefits when basic research is done. Therefore, it should be done."